CDP:Scale Out Pattern

Dynamically Increasing the Number of Servers


Problem to Be Solved

Handling high traffic volumes on a single web server requires a high-specification machine. Increasing processing performance by moving to a machine with a higher specification is known as "Scale Up." This approach has two problems. First, the higher the specification of the server, the higher the cost per unit of processing typically becomes. Second, server specifications have an upper limit and cannot be raised indefinitely.

Explanation of the Cloud Solution/Pattern

The approach where multiple servers of identical specifications are provided in parallel to handle high traffic volumes is known as "Scale Out."

You launch multiple virtual servers and use a load balancer to distribute the load across them. Depending on your system, traffic may increase drastically over weeks, days, or sometimes even hours. The AWS Cloud makes it easy to change the number of virtual servers dynamically, so you can match such dramatic variations in traffic volume.
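The idea of spreading requests over identical servers can be sketched in a few lines. This is only an illustration that assumes simple round-robin rotation; the text does not say which algorithm the load balancer uses, and the server names and the `distribute` helper are hypothetical.

```python
from collections import Counter
from itertools import cycle


def distribute(requests, servers):
    """Assign each request to a server in round-robin order (assumed policy)."""
    if not servers:
        raise ValueError("at least one server is required")
    rotation = cycle(servers)
    return {request: next(rotation) for request in requests}


# Hypothetical instance names; nine requests spread over three servers.
servers = ["web-1", "web-2", "web-3"]
assignment = distribute(range(9), servers)
load = Counter(assignment.values())
print(load)
```

With identical servers, each one ends up with the same share of the requests, which is why adding a server increases total capacity roughly in proportion.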

Implementation

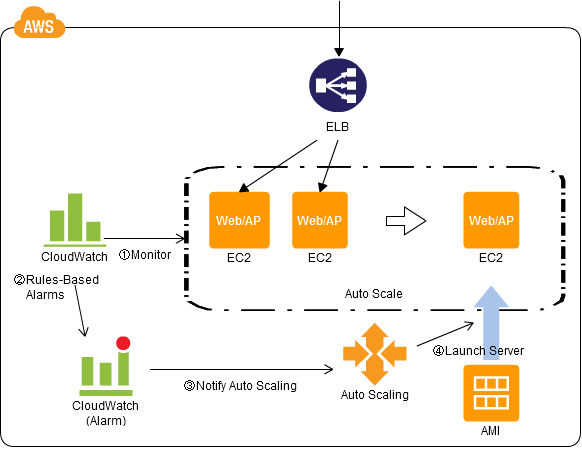

You can combine three services to build a system that scales out automatically depending on the load: the load balancing service (Elastic Load Balancing (ELB)), the monitoring tool (CloudWatch), and the automatic scale-out service (Auto Scaling).

(Procedure)

- Set up multiple EC2 instances in parallel (as web/AP servers) under the control of ELB.

- Create an Amazon Machine Image (AMI) to be used when starting up a new EC2 instance.

- Define the conditions (metrics) that trigger an increase or decrease in the number of EC2 instances. The average CPU utilization of the EC2 instances, the amount of network traffic, the number of sessions, and Elastic Block Store (EBS) latency are often used.

- Use CloudWatch to monitor these metrics, and configure it to issue an alarm when specific conditions are satisfied.

- Configure Auto Scaling to increase or decrease the number of EC2 instances when an alarm is received.

Completing the setup as described above makes it possible, for example, to start up two EC2 instances from an AMI prepared in advance when the average CPU utilization has been greater than 70% for at least five minutes continuously. Of course, you can also reduce the number of servers to match the circumstances.
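The rule in this example (add two instances once average CPU has stayed above 70% for five minutes) can be simulated without any AWS calls. The sketch below is a simplified model, not the behavior of CloudWatch or Auto Scaling themselves: it assumes one CPU sample per minute, and the `ScaleOutPolicy` class, the `simulate` function, and the `max_instances` cap are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScaleOutPolicy:
    threshold: float = 70.0   # average CPU % that counts as a breach
    breach_periods: int = 5   # consecutive one-minute samples required
    step: int = 2             # instances to add when the alarm fires
    max_instances: int = 10   # hypothetical upper bound on the group size


def simulate(cpu_samples, policy, instances=2):
    """Return the instance count after each one-minute CPU sample."""
    consecutive = 0
    history = []
    for cpu in cpu_samples:
        # Count consecutive breaches; any sample at or below the threshold resets.
        consecutive = consecutive + 1 if cpu > policy.threshold else 0
        if consecutive >= policy.breach_periods:
            instances = min(instances + policy.step, policy.max_instances)
            consecutive = 0  # start a fresh observation window after scaling
        history.append(instances)
    return history


samples = [50, 75, 80, 85, 90, 95, 60, 72]
history = simulate(samples, ScaleOutPolicy())
print(history)  # [2, 2, 2, 2, 2, 4, 4, 4]
```

The group grows only at the sixth sample, after five consecutive readings above 70%. A matching scale-in rule would mirror this logic with a lower threshold and a negative step.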

Configuration

Benefits

- This provides service continuity because the number of EC2 instances is increased automatically in response to an increase in traffic.

- This allows you to reduce costs, because the number of EC2 instances is reduced when there is little traffic.

- This reduces your workload, as an administrator, because the number of EC2 instances is increased or decreased automatically in response to changes in traffic level.

- When compared to scale-up, the limit on processing capability is extremely high because the required number of EC2 instances can be provided in parallel, under the control of the ELB.

Cautions

- This pattern cannot handle severe, rapid variations in traffic, such as traffic doubling or tripling within a few minutes, because in practice it takes some time to actually add EC2 instances after the need is identified. In such cases, schedule an increase in the number of EC2 instances at specific times: provide enough extra EC2 instances in advance to handle the load, and then delete the unneeded EC2 instances afterward.

- Consider whether to leave HTTP session management, SSL processing, and the like to the ELB, or to handle them on the servers under its control.

- Because the ELB has no mechanism for adjusting the amount of load distribution according to instance specifications, the EC2 instances under its control should all be of the same instance type.

- When compared to scale-out on the web/AP server layer, typically scale-out on the DB server layer is more difficult. See the Relational Database Pattern.

- To increase fault tolerance, distribute the scale-out across multiple Availability Zones (AZs). See the Multi-Datacenter Pattern. In that case, the number of instances added at a time should be a multiple of the number of AZs so that they can be distributed equally across the AZs.

- When the first SSH connection is made to a newly launched EC2 instance, an interactive fingerprint check may be performed to validate the host at login. In that case, automated processing that uses SSH from outside the instance will not work.

- When an update is required within an EC2 instance, you will need to also update the Amazon Machine Image (AMI) that was the source for launching in Auto Scaling.
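The caution about making the increment a multiple of the number of AZs comes down to simple arithmetic. The sketch below shows one way to round a desired increment up to the next multiple and split it evenly; the function names and the AZ labels are hypothetical and used only for illustration.

```python
def round_up_to_az_multiple(needed, az_count):
    """Round the number of instances to add up to a multiple of az_count."""
    if az_count < 1:
        raise ValueError("az_count must be at least 1")
    return (needed + az_count - 1) // az_count * az_count


def spread_across_azs(total, azs):
    """Split total instances across AZs; any remainder goes to the first AZs."""
    per_az, remainder = divmod(total, len(azs))
    return {az: per_az + (1 if i < remainder else 0) for i, az in enumerate(azs)}


azs = ["az-a", "az-b", "az-c"]  # hypothetical AZ labels
increment = round_up_to_az_multiple(5, len(azs))
placement = spread_across_azs(increment, azs)
print(increment, placement)  # 6 {'az-a': 2, 'az-b': 2, 'az-c': 2}
```

Without the rounding step, adding five instances across three AZs would leave one AZ with fewer instances than the others.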

Other

- See the Clone Server Pattern, NFS Sharing Pattern, and NFS Replica Pattern regarding file sharing when scaling out.

- See the State Sharing Pattern regarding session management. See also the Scheduled Scaleout Pattern.